There are always new toys in the world of technology, and the people who either make them or have decided to join their team are forever telling us, the creators and disseminators of information, how their pet concept is the new game-changer in town. Sometimes there is some truth in these claims, often none; most often it is the sleight of hand of making a new story out of old news that has only now become possible to present, package or implement in a certain way. Old wine in new bottles, obvious next steps and possible side branches of information technology get touted as a revolution, and the vast majority of the herd follows with ignorant gusto. The very same thing is happening at present with the idea of Apps and The Cloud.

Apps, Clouds, and other marketing beasts

Apps might sound like an esoteric, futuristic technology, but the word is simply short for applications, and an application is nothing but a piece of software. In the old days, application software was a specific category of software, which included anything used for a specific task or with some purpose beyond running the computer itself. Databases, word processors and similar useful tools were all applications. Now everything is suddenly an application, or at least an app, and somehow this is meant to be new and special, because the marketing people told us so.

The cloud has been an alternative name for the internet and networked systems for decades, but since we now have mobile apps which run on phones and web apps which run in the browser, the internet just doesn’t sound sexy enough any more, so we have the cloud. Now nothing is truly cool unless it’s in the cloud, which has come to mean, vaguely, that something is stored and runs away from your personal computer or other device, works off the internet with multiple servers and data sources, and is always on and accessible from everywhere … like the internet has always been. But it’s those marketing folks again, so I wouldn’t argue.

Chasing the cool crowd

The idea of remote processing and storage is as old as the client-server model itself, but with the growth of mobile and internet access around the world, ideas like software as a service and cloud computing are at last available for a larger audience to benefit from. Only now have they become worthy of mention.

Of course, this story all comes down to chasing the cool crowd, because even within the strict meaning of these terms as used today, apps and cloud computing existed a long time ago; they were simply technical and practical, and there was no poster child whose image you could flog to sell them. Fairly old phones already ran Java applications and other add-on software, everything from games to strange utilities. What didn’t exist was the iPhone and its App Store. That made apps cool, and suddenly they were a new trend.

On the web, the arrival of terms like web app in common usage, and the acceptance of and rousing support for cloud backup and computing, can be ascribed in no small part to a new wave of internet mash-up technologies that made these things accessible to a less technical crowd and that got the right amount of clout behind them from popular sources. Getting Real, 37signals’ book on their philosophy of creating web apps, was pivotal in the rush by everyone and their dog to set up a web-based service app. I have read Getting Real, and I highly recommend it if you are interested in creating web-based software, or any software for that matter. It is a book with many useful ideas, and it can be read in its entirety online at their site. But while 37signals had a very well thought-out philosophy and knew what they were talking about, most of those who followed did so simply because it was the next great thing. Then came services like Twitter, originally built on the Ruby on Rails framework used by 37signals, and cloud computing had poster children of its own. Another next-big-thing was born.

New Twitter layout

Twitter started as a deceptively simple idea. It was born in a time before web apps were fashionable, and before smartphones were commonplace, because Twitter was designed to be a gateway between the mobile SMS system and the web. Why not give anyone with a mobile phone the ability to send updates to an online microblog of sorts? That was the idea, and so, to fit within the 160-character SMS limit while leaving room for the username, the now famous 140-character Twitter limit was born.

In the beginning, Twitter was very much a product of the minimalist Getting Real school of thought. Functionality and features were kept to the bare minimum, the interface was functional and rudimentary, and there were no bells and whistles to speak of, apart from the magic of registering your mobile number with the service, sending an SMS and having it appear on your Twitter profile. A marvel of simplicity.

In time, Twitter became much more than its creators had bargained for and grew a social ecosystem of its own. To work around the stark simplicity of a single 140-character line of text with no other features, users came up with their own tricks to extend what it could do. Hashtags allowed people to insert subject keywords; they have since been integrated into Twitter’s search system and have spawned their own brand of humour and interaction. URL shortening services became a sub-industry of their own, as did support services like image hosting, video hosting, and custom applications for accessing Twitter through other devices and systems.

It was with these custom Twitter applications that part of the problem started, because they became immensely popular by extending Twitter’s capabilities with various access and usability enhancements. Large numbers of people came to know Twitter not as its creators originally intended, but through the windows of assorted desktop and mobile applications on a variety of devices, outside the web browser altogether.

Granted, the original mobile nature of Twitter’s concept gave it a mild real-time bias, but that aspect of the service wasn’t built into the site’s layout and interface from day one. Initially your Twitter feed behaved very much like a blog page, only with the entry box for new posts at the top. At the bottom of the page you still had standard next and previous links to navigate through your stream of posts. These were implemented as standard URL query parameters, so you could quite easily skip to page 10, or any other page, by entering the appropriate address if you so wished. All of this still followed most website conventions and worked perfectly as an in-browser experience.

Over time a lot changed. Live Ajax updating of the timeline was introduced, which told you how many new posts were available, and a simple click would display the already pre-loaded posts (with a few visual highlighting tricks taken from the good old 37signals). For me, the change that sent things in the wrong direction was the live update at the bottom of the page: a ‘more’ button that live-loaded the next page of content when clicked. URL access to other pages was gone, replaced by this very real-time-centric view of the timeline that you were stuck with. Catching up with older posts was a chore, and finding ancient ones near impossible.
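To make the difference concrete, here is a minimal Python sketch contrasting the two navigation styles: page-number links, where page 10 is just an address away, and a cursor-driven ‘more’ button, where each batch can only be requested relative to the last one loaded. The timeline, page size and function names are hypothetical illustrations, not Twitter’s actual code or API.

```python
# Hypothetical timeline: post IDs in newest-first order (larger ID = newer).
TIMELINE = list(range(1000, 0, -1))  # 1000 posts, IDs 1000 down to 1
PER_PAGE = 20


def get_page(timeline, page, per_page=PER_PAGE):
    """Offset pagination, like ?page=10 in a URL: jump straight to any page."""
    start = (page - 1) * per_page
    return timeline[start:start + per_page]


def load_more(timeline, max_id=None, per_page=PER_PAGE):
    """Cursor pagination, like a 'more' button: return posts older than max_id."""
    older = [p for p in timeline if max_id is None or p < max_id]
    return older[:per_page]


def reach_page_with_cursor(timeline, page, per_page=PER_PAGE):
    """Count how many sequential 'more' loads it takes to reach a given page."""
    batch, requests, max_id = [], 0, None
    for _ in range(page):
        batch = load_more(timeline, max_id, per_page)
        requests += 1
        if not batch:
            break
        max_id = batch[-1]  # the oldest post seen so far becomes the next cursor
    return batch, requests


if __name__ == "__main__":
    direct = get_page(TIMELINE, 10)
    via_cursor, n = reach_page_with_cursor(TIMELINE, 10)
    print(direct == via_cursor, n)  # True 10
```

Both routes end up at the same posts, but with page numbers it is one request to a typeable address, while with a ‘more’ button it takes ten sequential loads (the first screen plus nine clicks), and there is no address you can bookmark to get straight back there.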

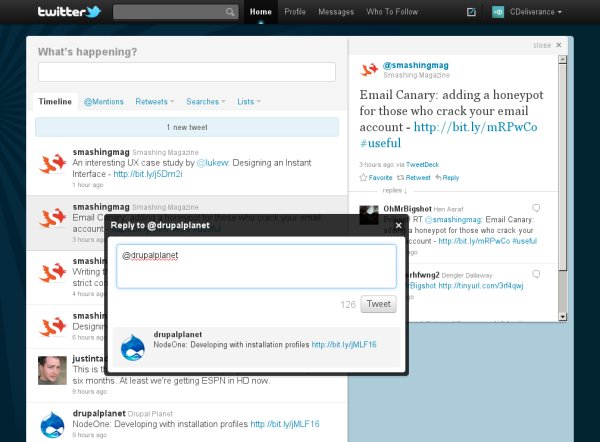

All this time, mobile and smartphone usage was probably growing to rival use of the web interface, so in some ways it was inevitable that Twitter would want to rethink the whole thing, and it did when it released the new Twitter interface to user testing last year. The new layout is still a beta of sorts, and the old interface can still be chosen, as I do most of the time. The new layout does clean up access to the various streams of Twitter data, such as mentions and retweets, using live-loading tabs, and it has page overlays for posting replies and such, but the basic change can be summed up simply as “let’s make our website look like a phone app.” All the curvy roundness is there, the slow loading symbols instead of simply going to new pages, the slide-out panels. All of it is clearly trying to make the in-browser experience more like the mobile experience, and I for one don’t see why I should be forced to use a website like a phone when I choose to use a computer.

This appification of Twitter will be complete when the new layout becomes mandatory, although the long trial phase tells me the transition hasn’t been smooth for most people, and there are probably a significant number of holdouts like myself who in some ways prefer the old-school interface. There have been other, more technical changes too. Along with the push to unify the look of the different interfaces through which people commonly use Twitter, there has been a move to restrict the openness of the API. Twitter got very big very quickly, enough to strain even the best of infrastructures, but even allowing for that, Twitter suffers more frequent outages, whether broad or localised, than almost anything else of similar stature. Part of the problem was the sheer variety of ways in which so many people could access Twitter data, and how much they could strain Twitter’s infrastructure without ever visiting the site.

To counter this, and also in large part to cash in on this snowballing juggernaut that still doesn’t quite have a business model, Twitter has over the past year severely restricted API access to data, with stricter limits on the number of requests served per hour and a cap that limits access to profile stream data to the most recent 3,200 tweets. Users themselves can’t access anything older than the last 3,200 tweets on their own stream unless they have direct link URLs to all their older tweets. There are, of course, Twitter backup services and even self-hosted solutions like ThinkUp or Tweet Nest, which create a great accessible archive of your tweets if you have your own web space to play with, but they don’t solve the problem if you already have a backlog of material on Twitter that exceeds the API limit. The real-time-centric view is narrowed further, and users can’t even access their own posts any more. It is a strange development in a world where even a company as inherently closed as Facebook now offers a way to download most of your personal data in an archive file.

This makes no difference to most casual users of Twitter, but the service shouldn’t forget that by choosing this very short-term view of content, it is depending solely on the size of its user base for its continued survival, and it is alienating the minority who actually produce the majority of real original content on Twitter, content which simply cannot be accessed in any sensible way beyond a certain time frame. The appification of the interface is almost complete, the lack of any way to save data locally through some sort of export forces Twitter users to depend on their data being out there in the cloud, and yet the cloud won’t let you find your own old data. A better example of ridiculous blind faith in the wonders of the cloud over prudence I cannot think of.

I chose Twitter as an example of this obsession with apps and the cloud only because it’s a service I am familiar with on a day-to-day basis. However, there are signs of similar, often misinformed, moves everywhere in our trend-following existence. Some examples don’t even have anything to do with the internet.

Digitisation of mall maps

Dubai has an abundance of one resource and one resource alone, and that is the shopping mall. For a population of a few million, we have tons of them: small ones, big ones, biggest ones, strange ones, indoor ski-slope ones, it’s all here. A very common sight in malls has always been the public map display, an essential resource, especially as malls have grown more gargantuan and sprawling. Occasional visitors can barely keep track of where they are, let alone where they need to head to get where they want to go. So the large back-lit mall map in its own free-standing station, placed at strategic points in the mall, has become the centre of many a congregation of random strangers crowded around the light, figuring out their next shopping chess move.

Recently I was at a mall which had always had these maps. This was a place that needed them desperately, considering its very un-rectangular layout, but the large back-lit map stands were gone. They had been replaced by little stubby information kiosks, with an illuminated perspex ‘i’ symbol above a lit computer screen. Gone was the simplicity of walking up to the map and working out at a glance where you were and where to find what you were looking for. Now you had to use one of those always-sluggish touch-screen interfaces to navigate what is essentially a glorified website, search for the name of a shop and be directed to it with unnecessarily animated graphics and maps. The mall map had been appified.

This was not the first mall to do it. Others, newer ones built over the past few years, had started out with only digital location maps and touch-screen interfaces. I’ve tried using all of these and frankly, I think they still have a lot of catching up to do with the paper version. They are slow and clunky, designed only for those who already know what they are looking for, and they completely do away with any serendipitous connections, whether in finding locations or with the other lost souls crowded around the communal map. Touch screens are for one person; paper maps are for a crowd. The mall experience has been taken out of a social space and shoved into a solitary computer terminal. I think it’s a horrible idea.

To top it off, I’m sure the new digital maps are in the cloud too, probably hosted on some crucial central server in the mall so that changes can be made centrally and the terminals simply load their screens over the network. It’s a fine idea in principle, but considering malls do not change every day, and these digital systems are constantly breaking down, becoming unresponsive, or frustrating the less electronically inclined, what exactly is the point of the fanciness? As far as I can see, it’s all a lame attempt to fulfil that mental image of people interacting with shiny digital interfaces in public spaces, so that we can say we live in the science-fiction future. When a large sheet of paper wins out in practicality over our best new ideas, there is something wrong. Making everything look like it was meant to work on a phone and swish around in indulgent animated fervour can’t possibly be our idea of progress in presenting and providing access to essential information.

All things must come to an end

The worst part about these societal technical infatuations is not even that, in most cases, they are inferior to older solutions: less useful, less reliable, less efficient, or downright stupid. The worst part is that, like all things, these trends and the technologies they rely on will eventually die, and when that happens a lot is lost in the process. Gone are the days of finding shoe boxes of people’s old stuff at garage sales; most of our digital stuff today has trouble surviving till next Tuesday, no matter how many fancy terms we label it with. The cloud is not the solution to everything and is no guarantee that data will survive. Accidents happen, redundancies fail, and, more importantly, companies go out of business and services are shut down. Half of the big online email providers from the late 90s don’t exist any more, and neither do extremely popular web hosting services like GeoCities. How much is lost every time that happens, and how many people don’t even realise what has been flushed into oblivion? Consider that, and then talk to me about the cloud.

It’s getting more manageable in some cases, because in addition to multiple redundancies within the services themselves, there are now third-party services that help you back up a lot of your online data. Services such as Backupify are worth looking into as safeguards against losing valuable material spread around the net. Ironically though, they also use Amazon’s cloud infrastructure for their backups, as, I imagine, do some of the services you’re trying to back up, and that brings the problem into focus. You cannot depend on one platform and set of technologies to save you from disaster, and in the case of information, you don’t need a major disaster to lose things; minor mistakes are enough.

Use the cloud, but also use your brain, and back up important things locally, in as many media as possible, as well as remotely. Everyone touts remote backup and the cloud as true redundancy and brings up horror stories about fire and natural disaster. But how many people do you know who have had a house fire? Probably very few. How many people do you know whose large storage drive has randomly died on them, taking all their data with it? Quite a few, I’m sure. You can’t prove something is worth it with extreme scenarios while the more likely ones aren’t covered. Don’t just rely on the cloud; rely on the cloud and hard drive backups and optical disc backups, and when we graduate to holographic super nano-technology whizz-bang backups in the future, make sure you get those too. If your data is important, it’s worth the extra investment of time and money. In most cases, that investment will be lower than the cost of storing and retrieving gigabytes of data from the cloud over increasingly metered connections.
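As a minimal sketch of the local half of that advice, assuming your backup media are mounted as ordinary directories (say, an external drive and a USB stick), the Python below copies an important file to each location and checks every copy against a SHA-256 checksum of the original, since an unverified copy is only a hope. The function names and paths are hypothetical; optical discs and cloud storage would need their own steps.

```python
import hashlib
import shutil
from pathlib import Path


def sha256_of(path, chunk_size=1 << 20):
    """Hash a file in 1 MiB chunks so large files don't need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def back_up(source, destinations):
    """Copy source into each destination directory and verify every copy.

    Returns a dict mapping each copy's path to True if its checksum matches
    the original, False otherwise. Destination directories are hypothetical
    mount points supplied by the caller and are created if missing.
    """
    source = Path(source)
    expected = sha256_of(source)
    results = {}
    for dest_dir in destinations:
        dest_dir = Path(dest_dir)
        dest_dir.mkdir(parents=True, exist_ok=True)
        copy = dest_dir / source.name
        shutil.copy2(source, copy)  # copy2 also tries to preserve timestamps
        results[copy] = sha256_of(copy) == expected
    return results
```

Called as, for example, `back_up("thesis.odt", ["/mnt/external/backups", "/media/usb/backups"])` (hypothetical paths), it returns one entry per copy, and any `False` marks a copy to redo. Checking each copy, rather than trusting the copy operation, is the whole point: a drive can fail quietly long before it fails loudly.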

With the appification of everything, the losses stand to become far worse. Thus far, human knowledge has been stored in fairly universally accessible and translatable media. Say what you will about the death of the book, but it has served us a good while, and rotting 300-year-old volumes can still be scanned carefully to save most of what they contain. We are not going to be as lucky with digital technology. Sure, there are open formats like standard databases, HTML websites, the newer EPUB e-book format and similar, but with this drive to make everything an app and a slick interface rather than an efficient store of information, more and more things will be created as apps rather than converted to apps from more open media. Those apps will eventually die, or be lost because they no longer work on the hardware and systems available. That is a real, long-term problem, beyond the simple fact that most of these fancy app interfaces range from truly ugly to largely impractical, and are designed around what we think would look cool rather than what we know works best.

Use apps and their conventions if your purpose will be served by them, and use the new and now widely available cloud infrastructure to boost the performance of your web projects; do all of that, but don’t be sold on the universality of these solutions or on the idea that they are the future. They are simply the present, and most likely one fleeting present in a series of fleeting present technologies, each with a brief heyday in the larger scheme of things. Whatever you do, don’t be caught with your apps down and your head in the cloud.